2024 December

OCI Cloud Shell - Import Data Pump Dump Files into Autonomous Database

As discussed in my other articles, there are various ways to import data pump dump files into an autonomous database including using Import button DB Actions on the OCI Console or by using SQL Developer as seen in this blog post. In this guide, I will demonstrate how to import data into an autonomous database on OCI using the cloud shell on the OCI Console.

To demonstrate this, we'll have an Oracle Data pump dump file taken from a source database and uploaded into an Object storage bucket in OCI. We will have also provisioned an Oracle Autonomous database instance (the target database) and assume that the landing zone (networking, compartments, policies etc) have been set up.

Pre-requisites to have for a successful migration exercise

A data pump exort dump file 'EXPDAT01-13_53_25.DMP'. We'll import the HR schema

A Storage Bucket in OCI where the export dump file has been uploaded

A Target Autonomous Transaction (ATP) Database.

Cloud Shell terminal (OCI Console)

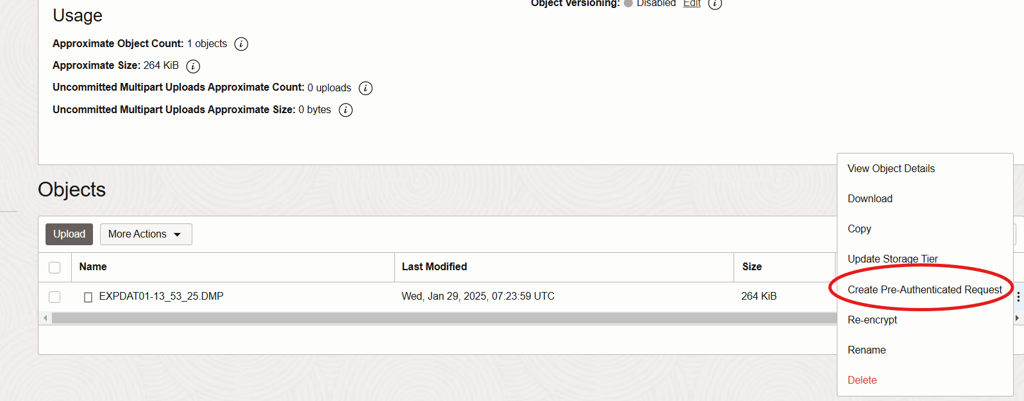

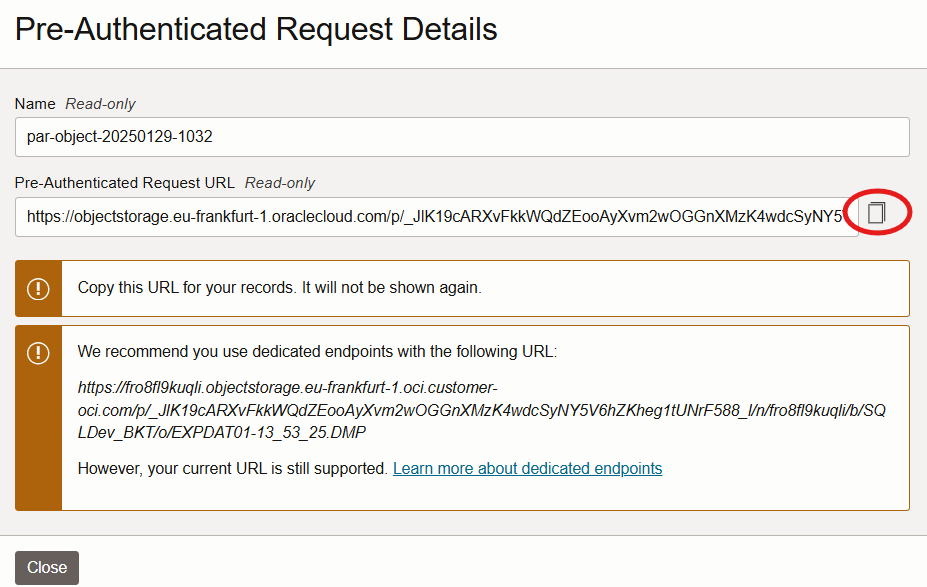

We will first need to create a pre-authenticated request of the dump file in the object storage to allow access by the DB to this dump file. In the SQLDev_BKT, locate the EXPDAT01-13_53_25.DMP dump file and click on the three buttons on the extreme right and select 'Create Pre-Authenticated Request'

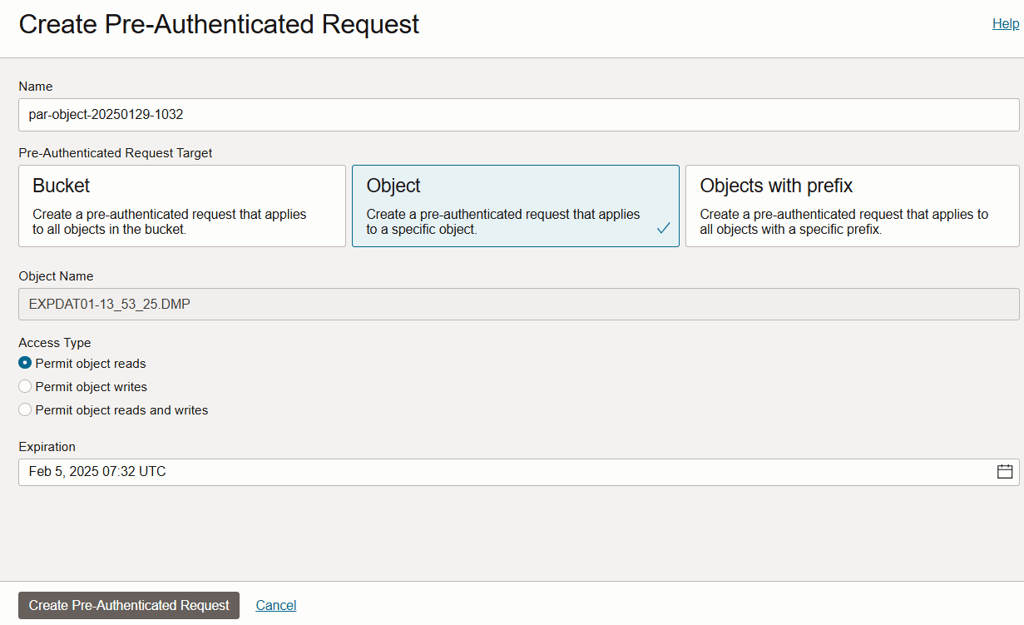

For security purposes, be careful to only create pre-authenticated requests for only the objects desired, in this case, only our dump file, however you can also create one for an entire bucket, which comes in handy when you have more than one dump file. Leave everything else as the default and click to create.

Copy the pre-authenticated URL generated on some text pad somewhere. We'll need this later in the exercise.

Next we need to create a credential in the autonomous database that will be used to authenticate to the dump file in the object storage using the URL above. Without this, the autonomous database and thus IMPDP isn't able to access the dump file. You create the credential using the DBMS_CLOUD.CREATE_CREDENTIAL procedure using the below syntax;

BEGIN

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'credential_name',

username => '******@***.com',

password => 'auth_token' );

END;

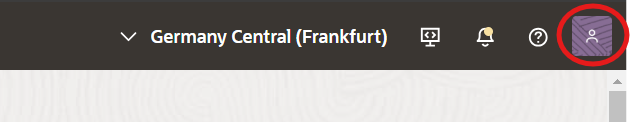

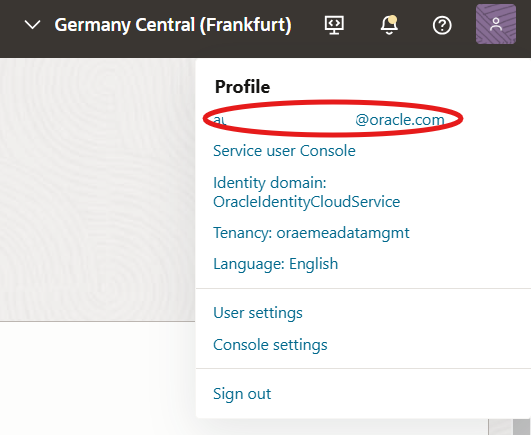

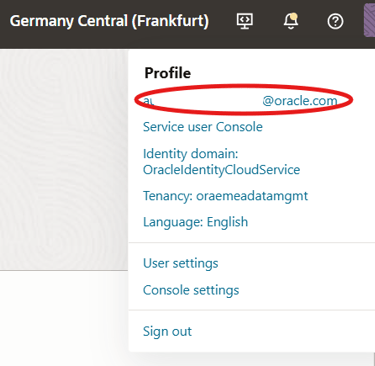

As you can see, we'll need the above parameters before we can create our credential. The name can be anything you wish to name your credential. In this case, we'll call our 'ADB_CREDENTIAL'. Our username is the logged user on OCI who's going to perform the import job. Click your profile tab on the extreme right at the top of the OCI console as shown below.

Then click on your username, which is your email address under the profile

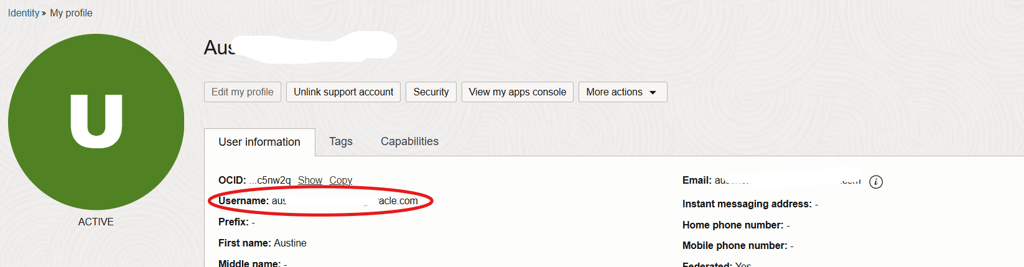

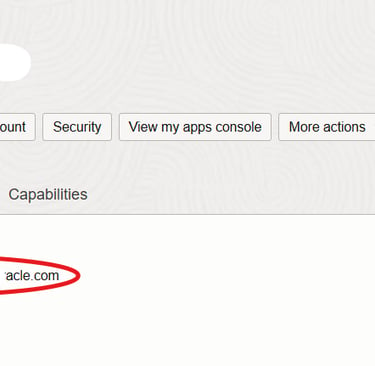

You can see your username below. Take note of that, we'll need it to create our credential.

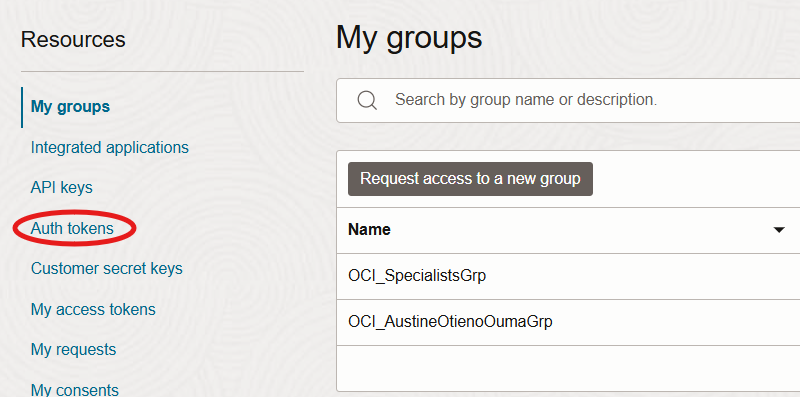

Next we'll generate the password needed by the credential. To do that, under 'Resources' in our profile, click on 'Auth Tokens'. This is on the same page above, where we identified our username.

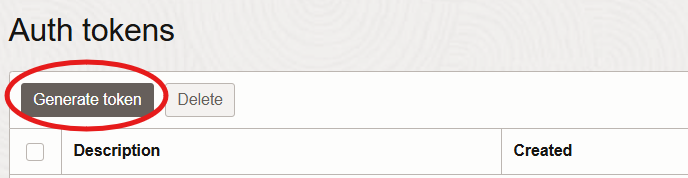

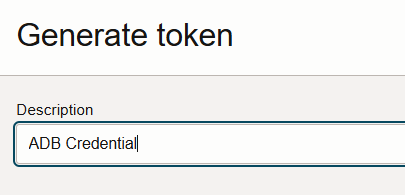

Click the 'Generate Token' button to generate a token and give a description of the token you're generating as shown below.

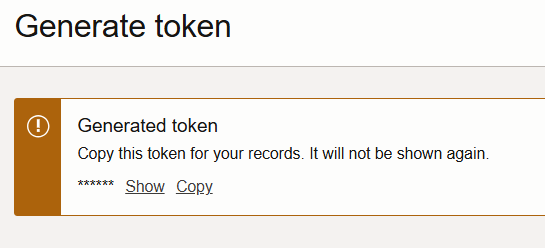

Take note that the credential is only shown once. Copy it in some text pad somewhere as we'll need it also to create our credential.

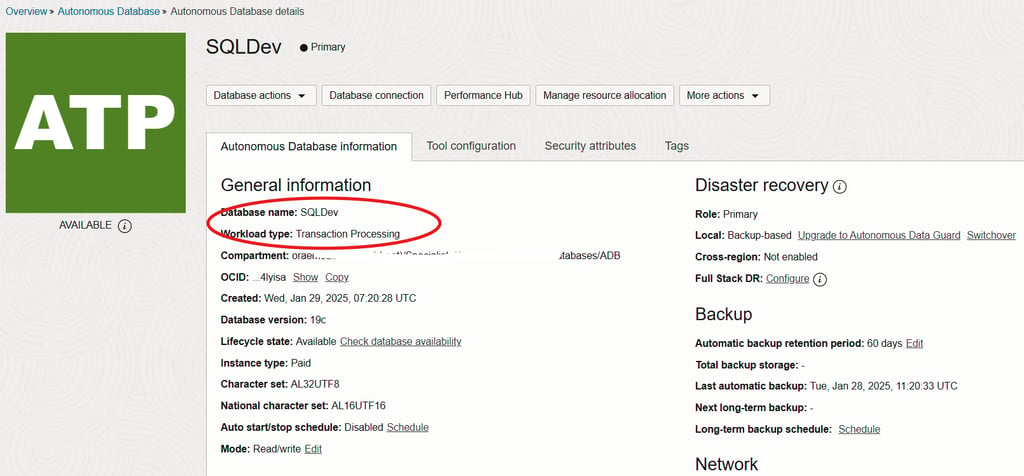

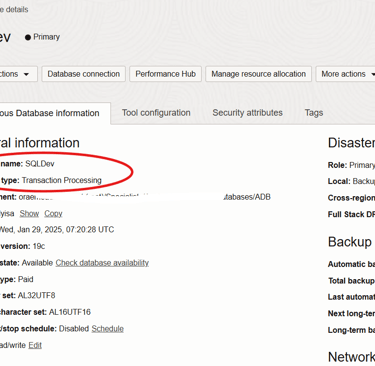

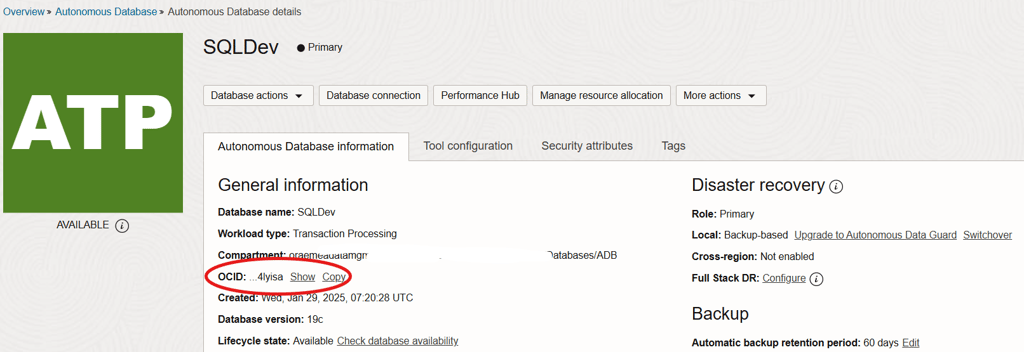

Now that we have all the necessary parameters to create our credential, we're going to log in to our target autonomous database instance to create the credential. We could do this in the cloud shell, or SQL Developer Web on the console, however since we're using the local SQL Developer in this exercise, I might as well execute the CREATE_CREDENTIAL procedure there. Before I do this, let's look at some details of our target ADB instance. As mentioned before, the DB name is SQLDev and of type Transaction Processing.

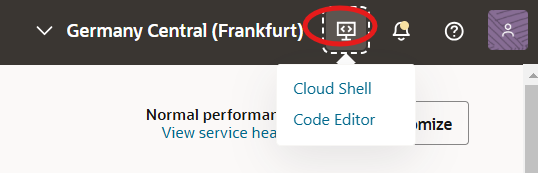

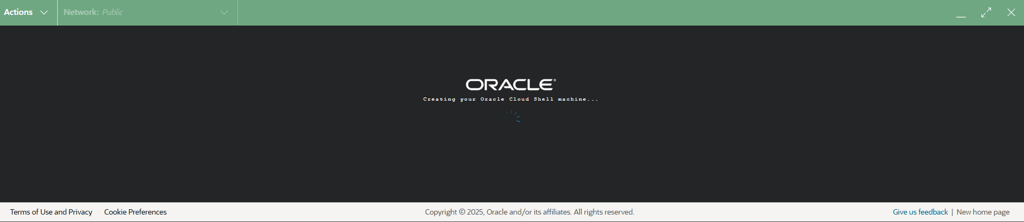

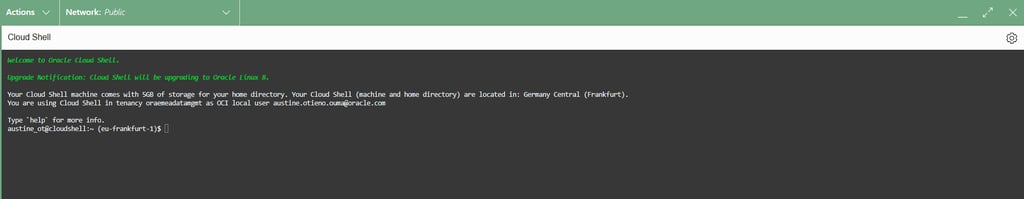

Next we launch the Shell on the OCI console and set it up to point to the target database as well as configure it ready for the import job. Click on the 'Developer Tools' as shown below and then click on 'Cloud Shell' to launch the Cloud Shell terminal

The cloud shell window will be launched at the bottom of your OCI Console page. If run for the first time, it'll take a few more minutes to set itself up for the first run. You can minimize, maximize or hide this window allowing you to continue working on the OCI console.

Next we locate the OCID of our Autonomous Database. You can get that on the General Information page as show here. Copy it to same text pad some where as well need it later.

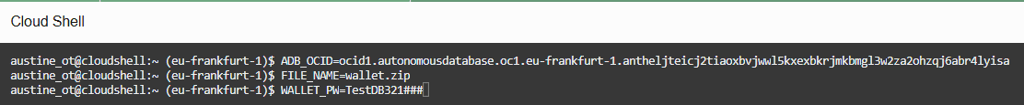

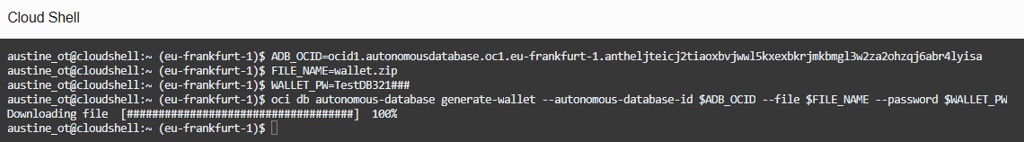

On the shell prompt, we'll now generate a wallet with this information. Using variables, we'll also supply the wallet name and the wallet password. We'll then run the > oci command to generate this wallet.

Run the > oci autonomous-database generate wallet command to generate this wallet using the variables above.

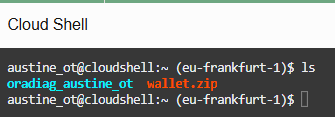

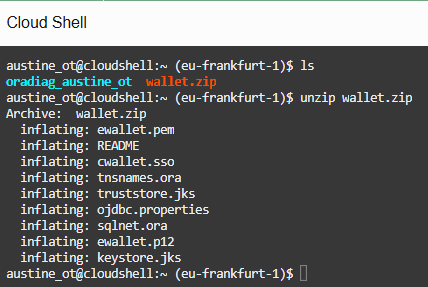

After running the command, you'll find a zipped wallet file under the name specified with the FILE_NAME variable. Unzip this file.

When the file is unzipped you'll notice a couple of files in your home directory including wallet files, tnsnames.ora and sqlnet.ora files. You can edit some of these files as you deem appropriate for your environment.

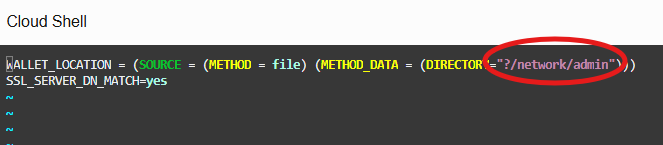

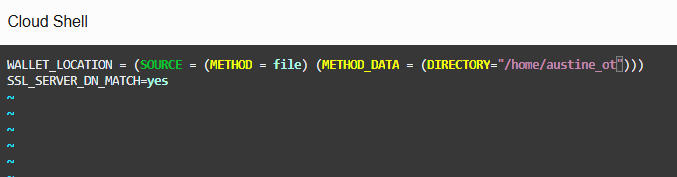

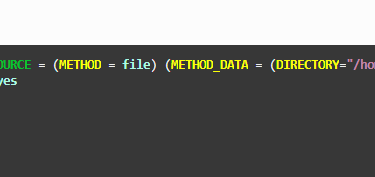

Edit the sqlnet.ora file to point to your home directory, in my case, '/home/austine_ot', which is where your configuration files have been unzipped. Replace the '?/network/admin' entry with your home directory or preferred location. Be careful not to delete the double quotes. Save the file entries and exit. You can have these files in any directory you wish.

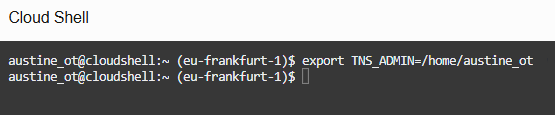

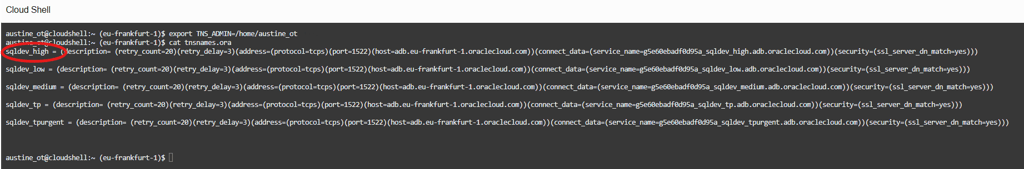

Export the TNS_ADMIN variable to this home directory.

You can view the contents of the tnsnames.ora file. Oracle recommends for data pump operations, you use the *_high service which is what we'll use in this guide.

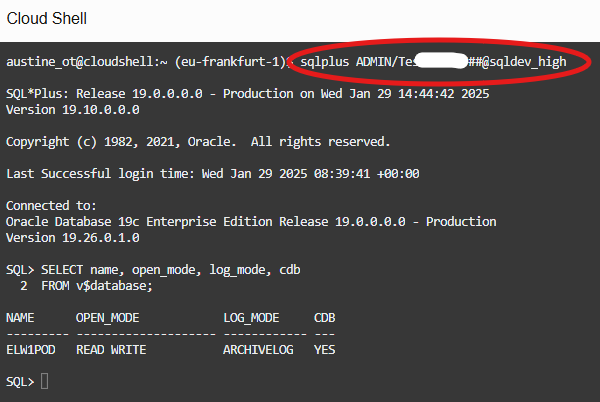

Let's try log in to SQL and query the database using that service name to test connectivity. We'll use this same service with IMPDP.

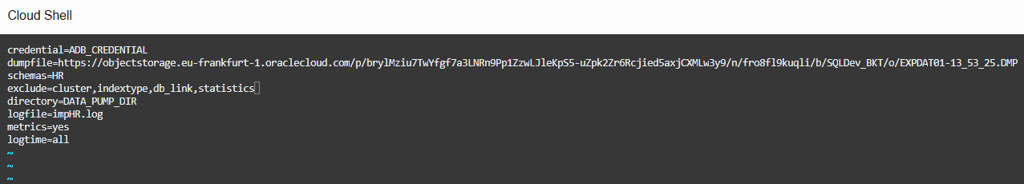

We'll now create our import parameter file (imp.par) with the import parameters to perform the data pump import job. You can add more parameters to suit your requirements. In the 'dumpfile' parameter, enter the pre-authenticated request URI we generated earlier in the guide. We still can't run the import yet as we haven't created the Database Credential yet. We'll do that next.

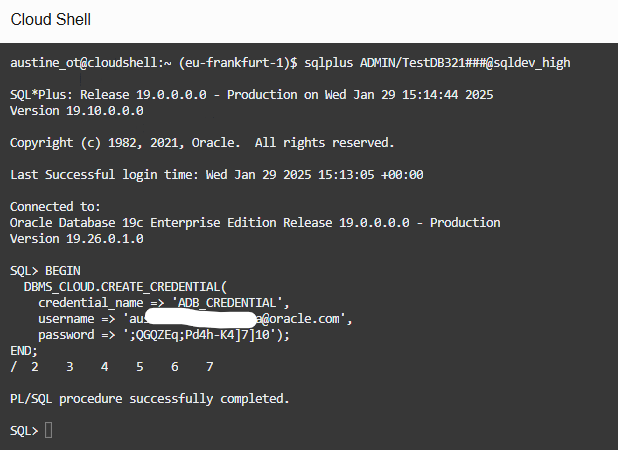

Log into sqlplus and execute the DBMS_CLOUD.CREATE_CREDENTIAL procedure with the username and auth token we saw earlier in the guide.

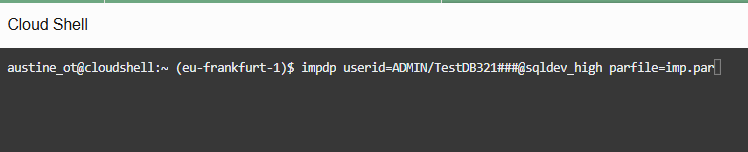

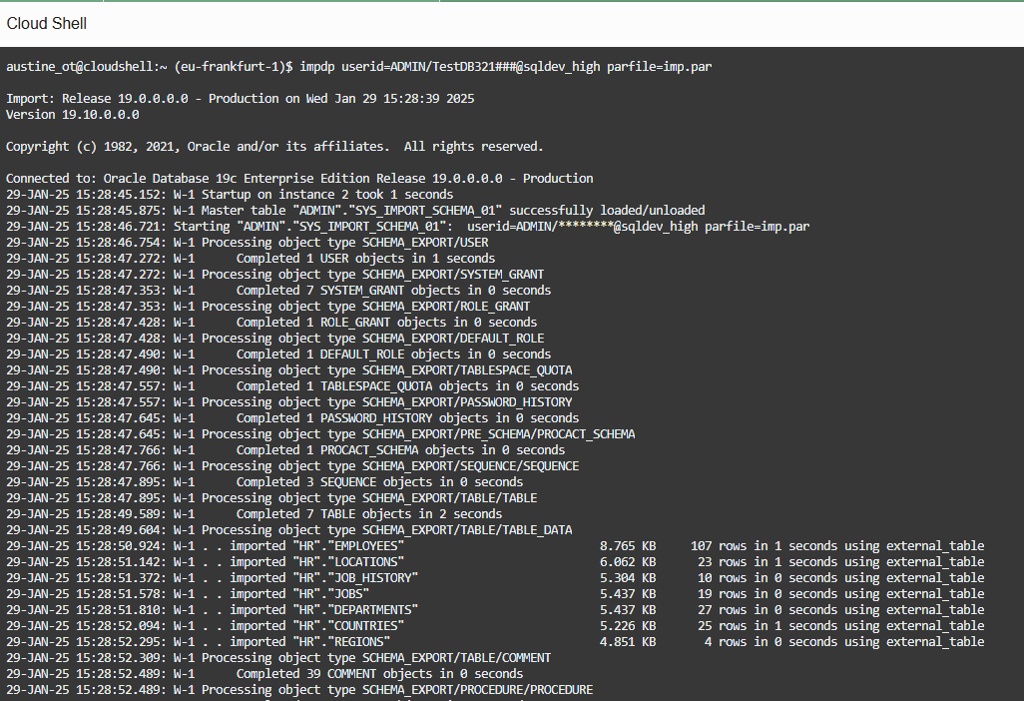

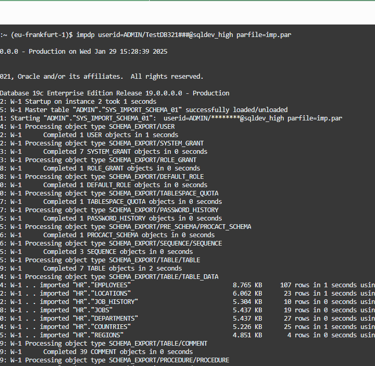

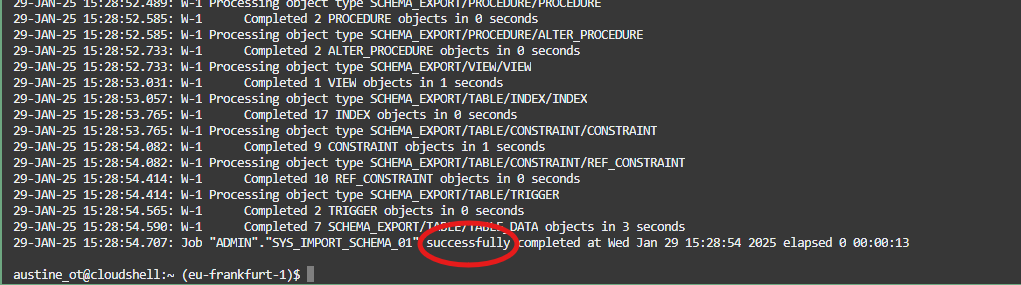

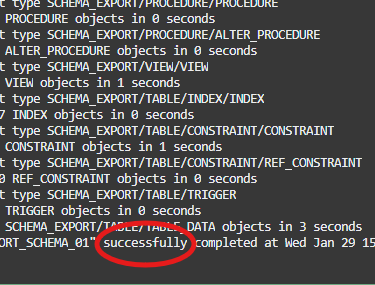

With the credentials and parameter file created, we are now ready to initiate the import job. On the shell terminal execute IMPDP along with the parameter file.

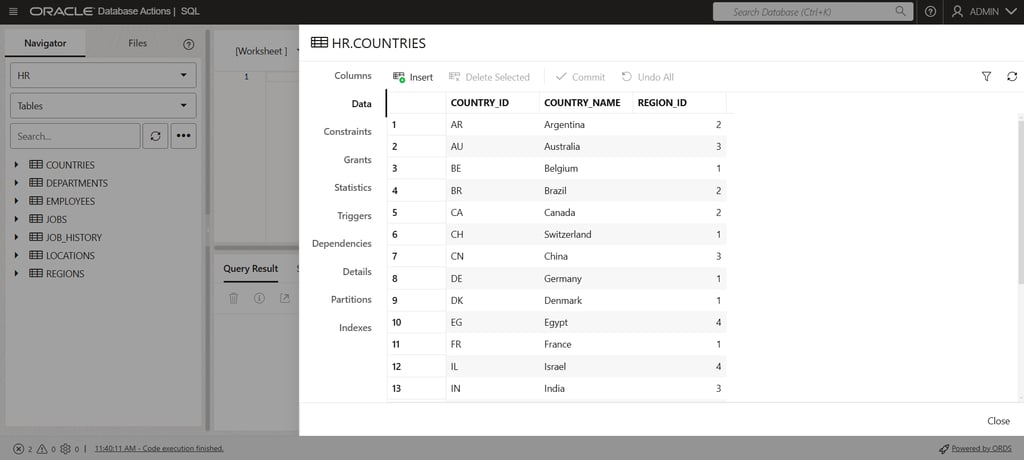

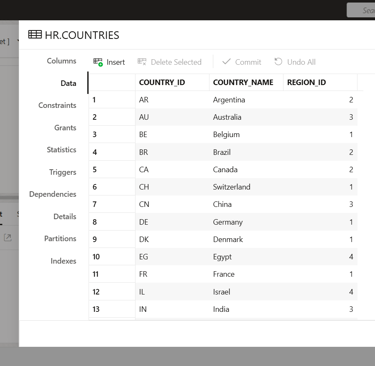

Confirm that the import job ended successfully and then check if you can query the imported data on the autonomous database.

On the OCI console, DB Actions, you can query and view the HR schema as seen below which further proves your import job was successful.

That's it! Hope you found this helpful.

Cloud Shell Import Dumps into ADB

Import Data Pump Dump Files into an Autonomous Database Instance using the Cloud Shell on OCI

Austine Ouma

12/27/2024