2025 June

Autonomous Database - Data Pump Import Wizard

Data Pump Import from the OCI Console?

From the OCI console, it is possible to execute Data Pump Import jobs directly into an Autonomous Database. Currently, this feature is limited to Import jobs only; exports aren't available ... yet. For those who've used SQL Developer to execute Data Pump import jobs into an Autonomous Database, as I demonstrated here, Database Actions (formerly SQL Developer Web) on the OCI console will look quite similar. Database Actions, just like the traditional SQL Developer on your laptop, brings you your favorite Oracle Database desktop tool's features and experience to your browser. It is recommended to go through this guide to ensure you've set up correctly for this exercise.

We'll look at how to perform data pump import jobs into an Autonomous Database from the OCI Console using Database Actions. Prerequisites to this exercise are the availability of;

A running autonomous database instance

An object storage bucket

A data pump export dumpfile in the object storage bucket.

Landing Zone with compartments, networks, policies & access privileges configured

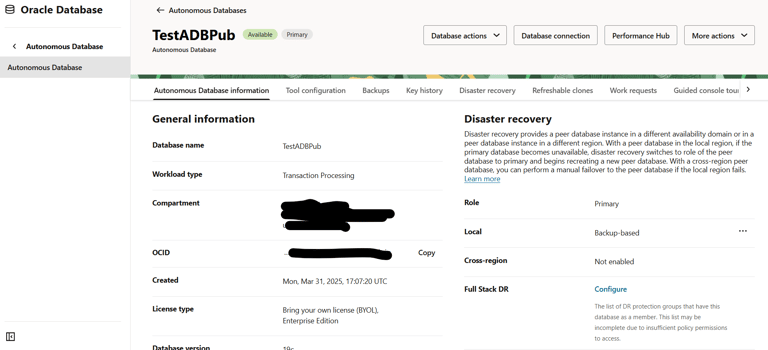

Here is a snippet of my target autonomous database running. This is a basic 19c Autonomous Transaction Processing (ATP) Instance

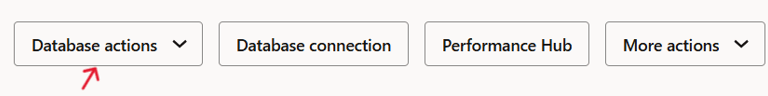

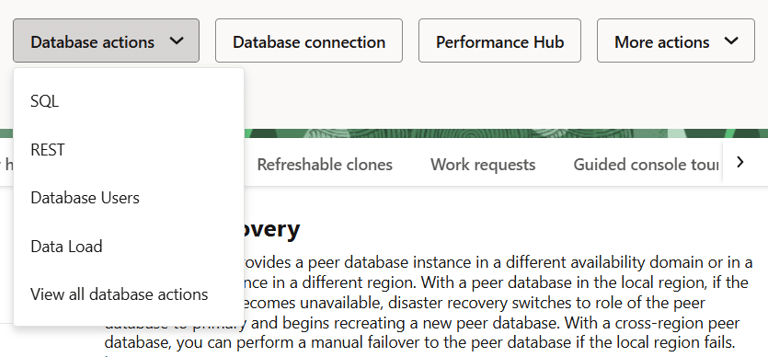

Click on Database Actions to launch it on a separate page.

Under Database Actions you'll see Database Management tools such as SQL, an interface where you can execute SQL commands, under Database Users you can manager database users, creating, dropping them or assigning roles and privileges and roles. Click on 'View all database actions'.

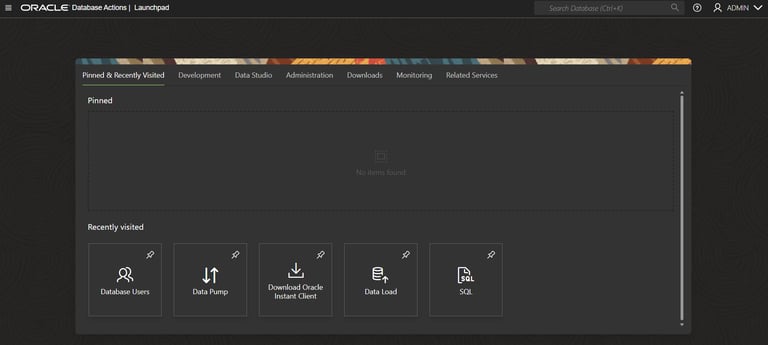

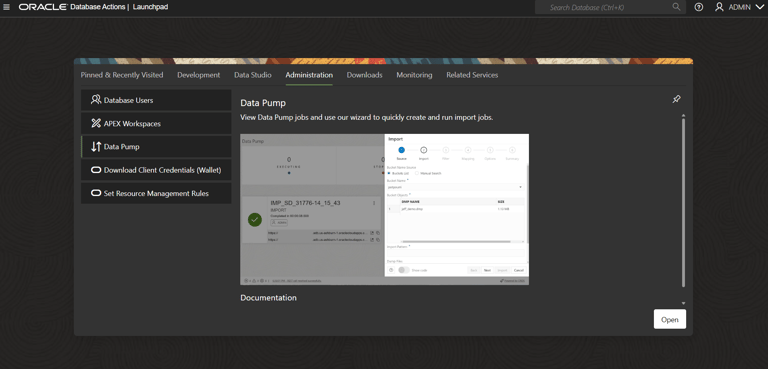

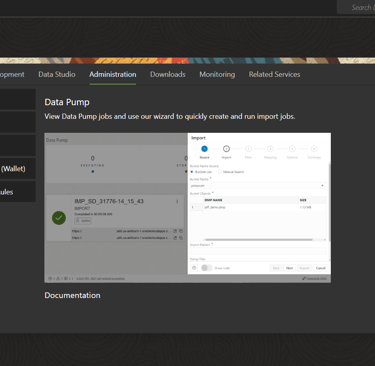

That opens the below window that offers a launchpad for several database administration tasks such as development, monitoring etc. If you had worked with data pump earlier, you can launch it again from the 'Recently Visited' tab at the bottom of the screen. If not, you can click on the Ádministration' tab and launch data pump from there.

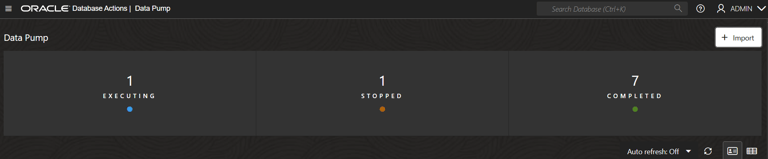

Clicking on Data Pump opens the Data Pump Wizard from which you can perform data pump import jobs as well as monitor running jobs and view the dump files and log files.

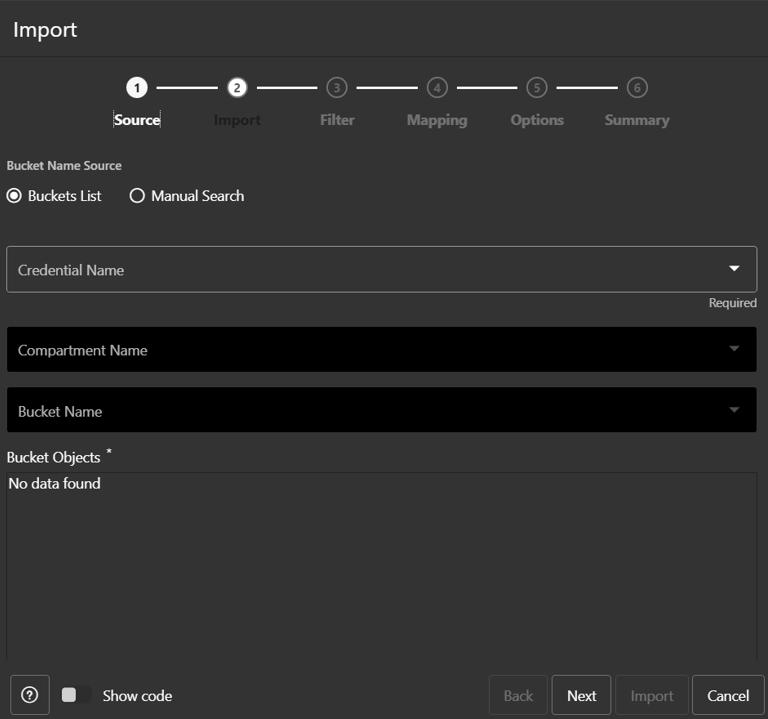

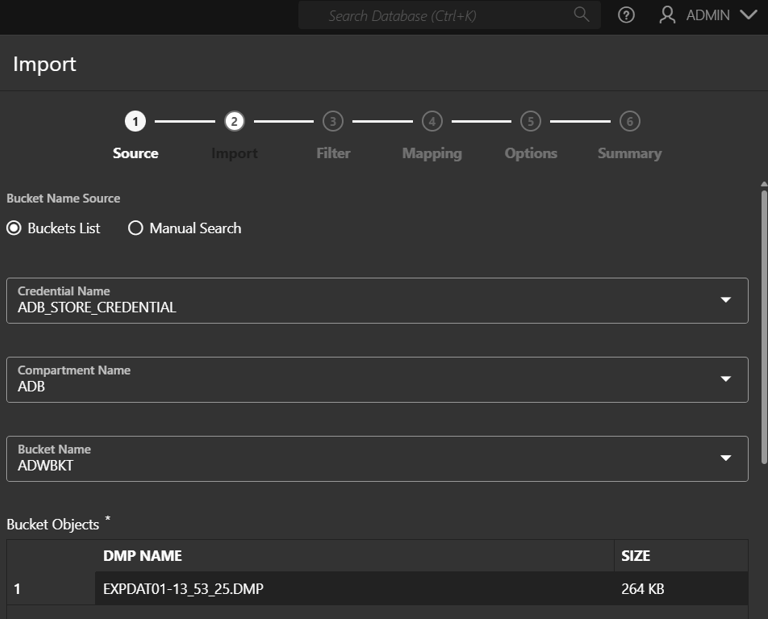

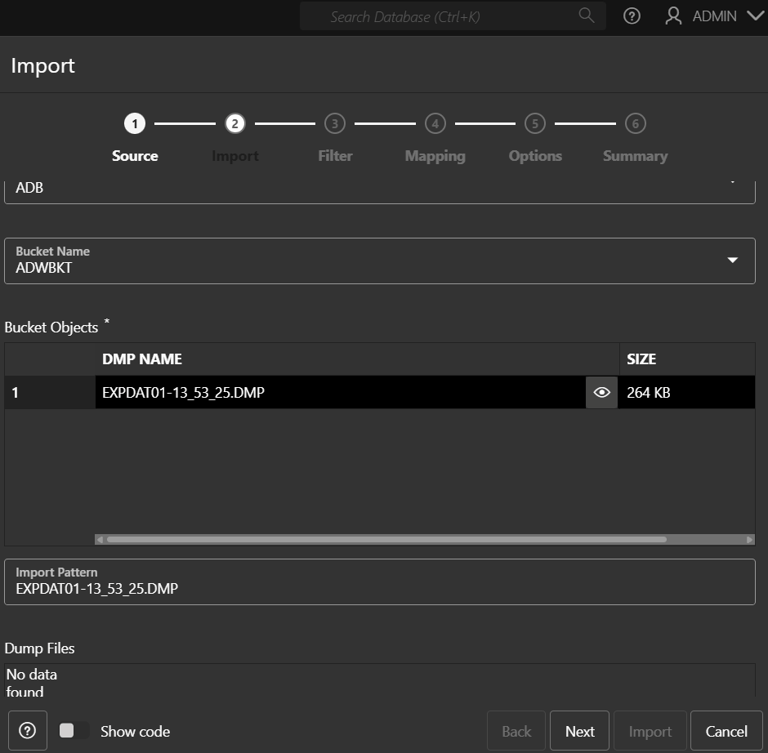

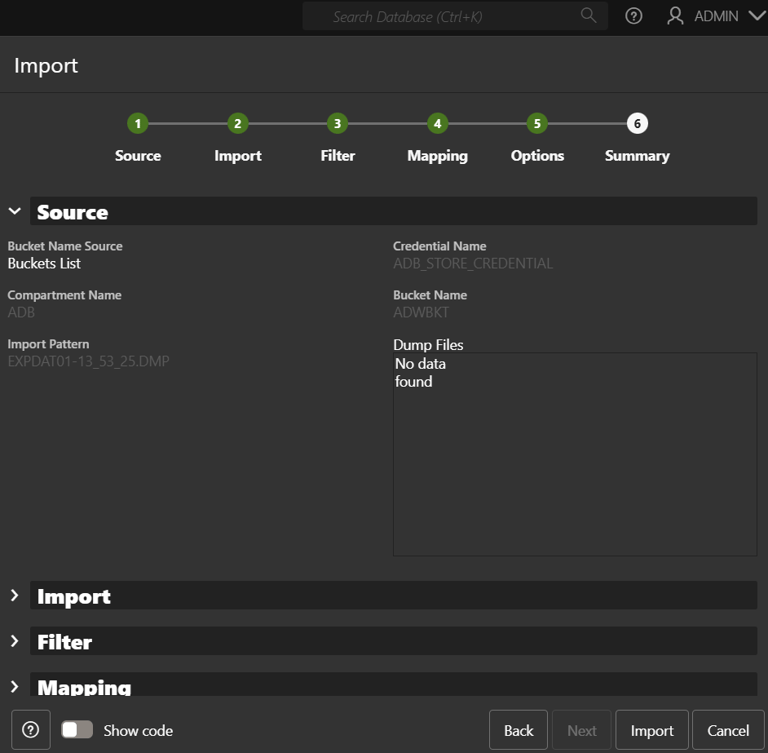

The Import Wizard offers a workflow to perform the data pump import job starting with locating the dump files in object storage through filtering schema based or full database jobs. This will look pretty familiar to those who've used Oracle's SQL Developer to perform data pump jobs.

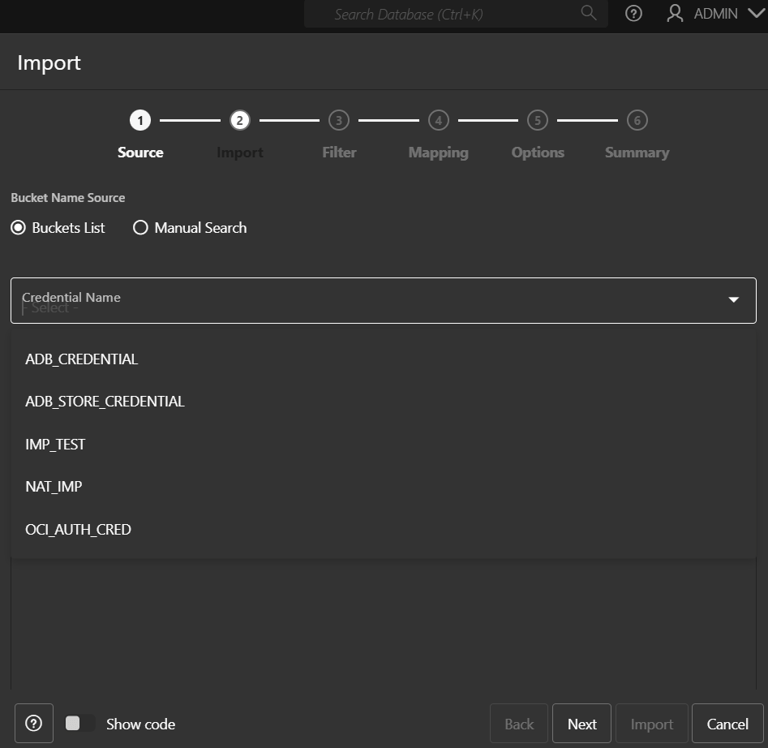

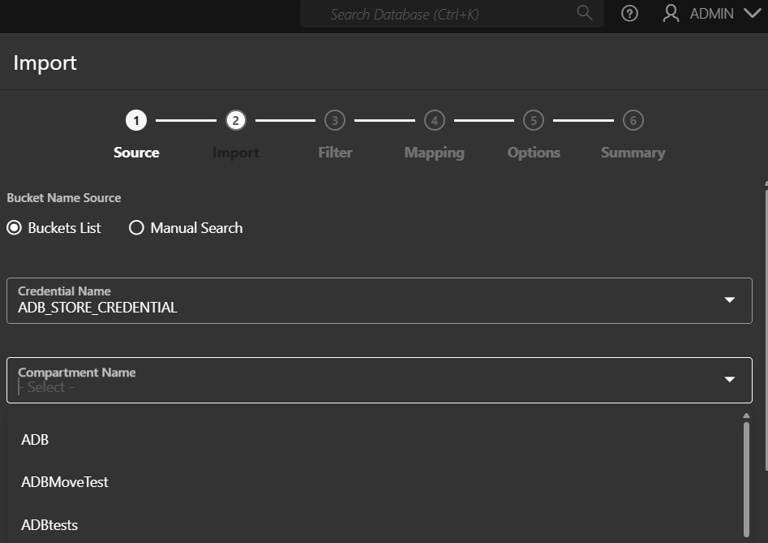

Select the database credentials created earlier in the process prerequisites.

Pick the relevant compartment where you have your bucket.

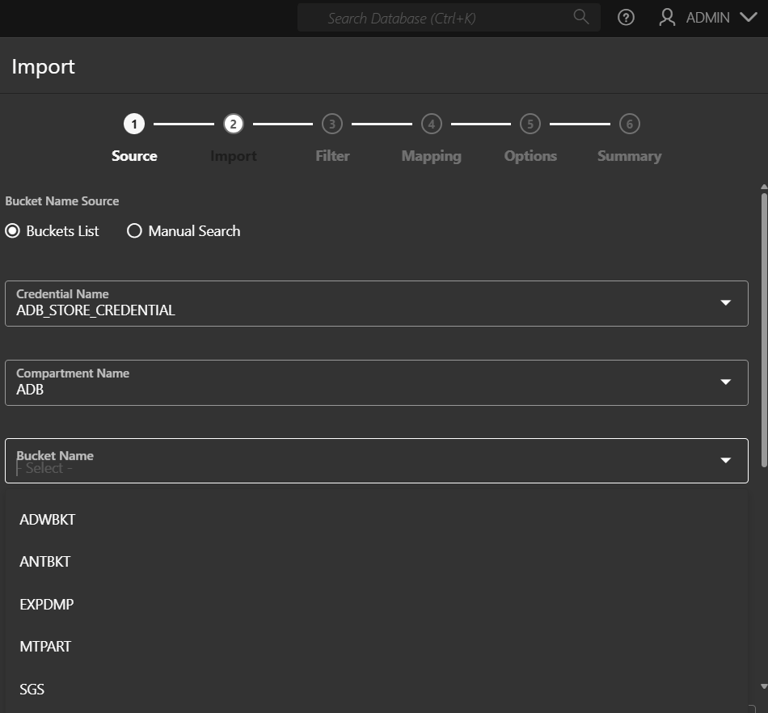

Select the bucket

Select the dump file(s). You can further refine the dump file list by using the import patterns i.e., EXPDAT%, to only show dump files beginning with the EXPDAT keyword and then click next.

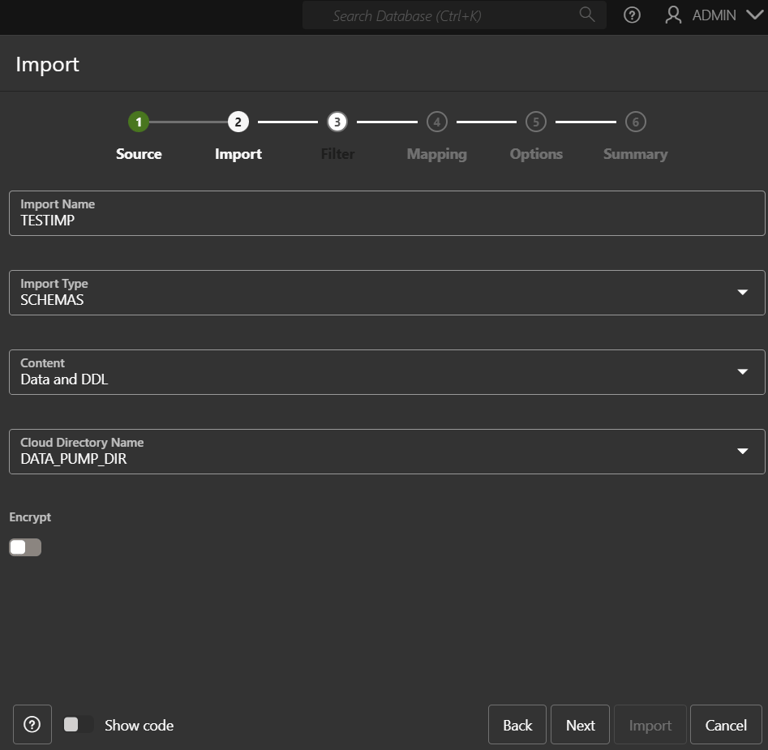

On the Import page, enter the job name, the type of import job to be executed, Oracle recommends schema-based jobs. Enter the content type as well as the directory name to be used.

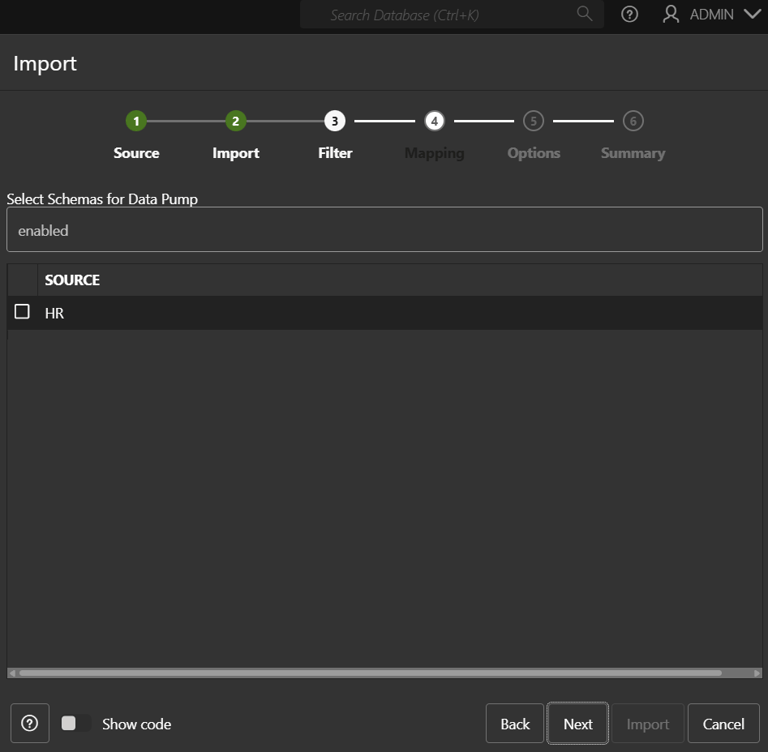

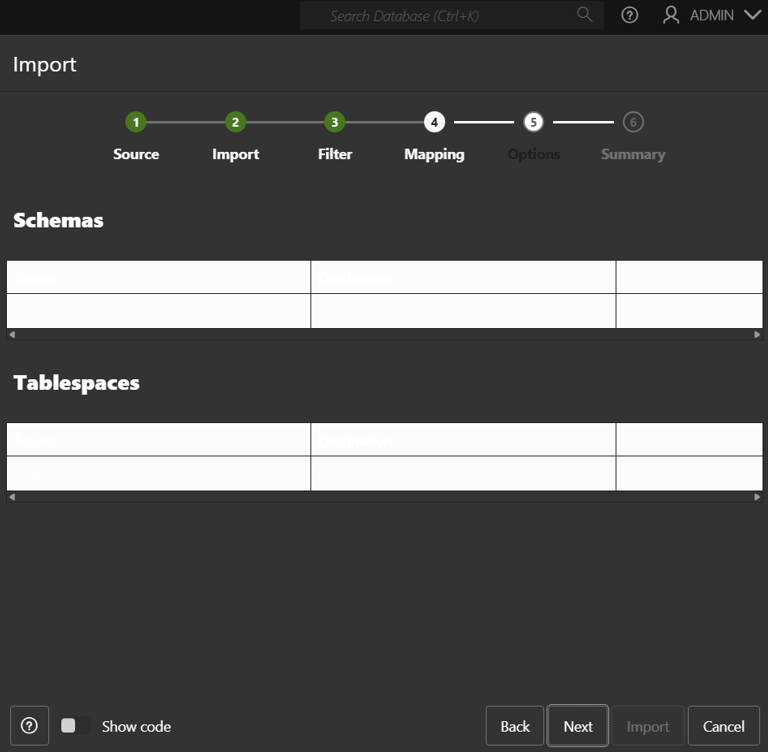

Select the schemas to import. You can remap schemas and tablespaces as necessary

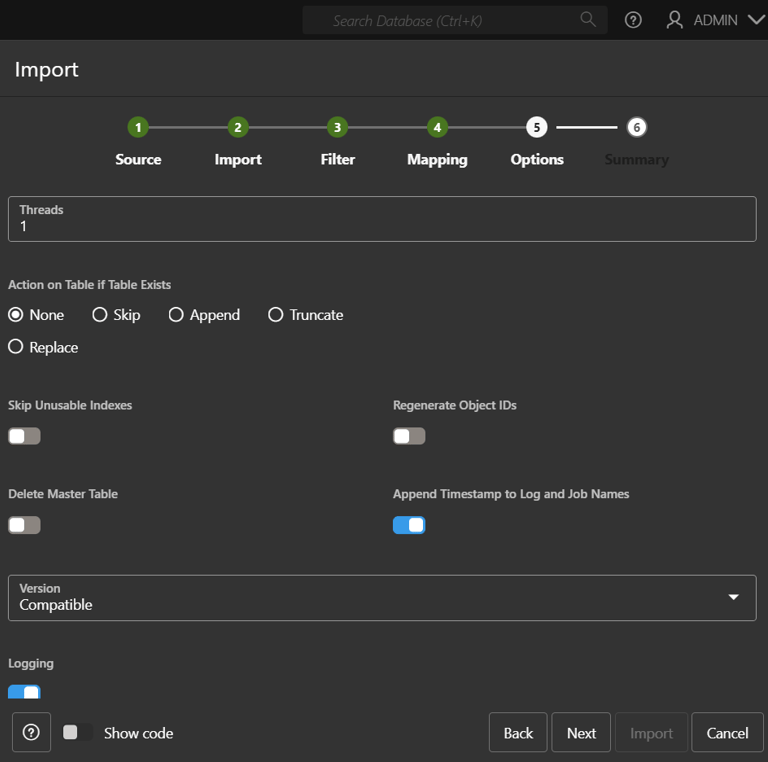

In the options page, refine the import job as necessary by editing the 'Threads' parameter to parallelize your job and improve performance. Of course, this is dependent on the resources allocated. You can also edit 'Action on Table if Table Exists', logging etc. Click Next.

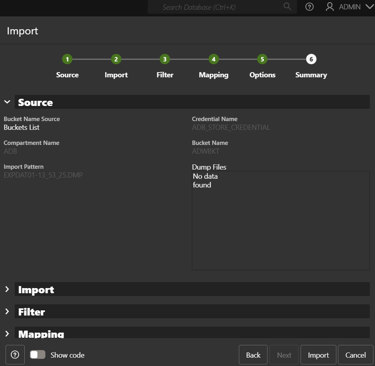

View the summary of your actions and if this is fine, click 'Ímport' to start the import job.

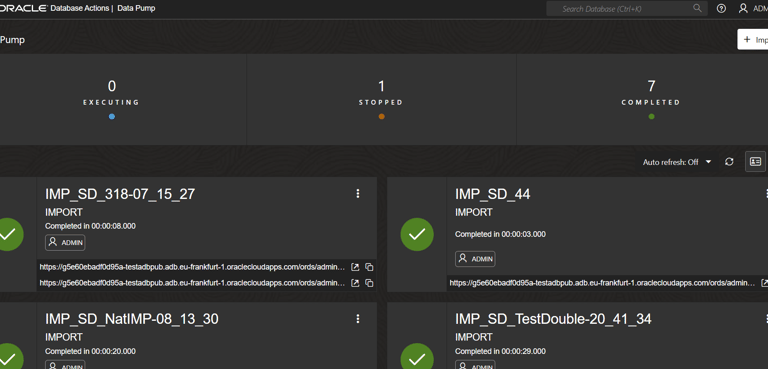

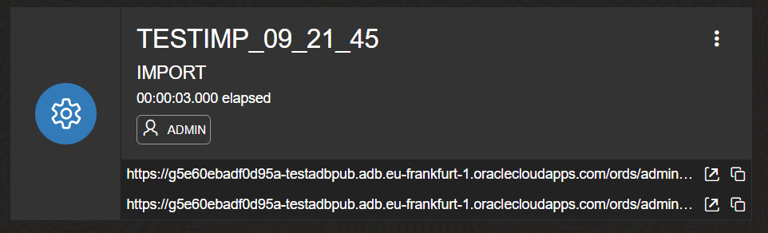

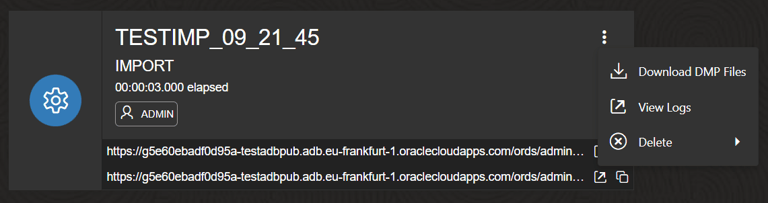

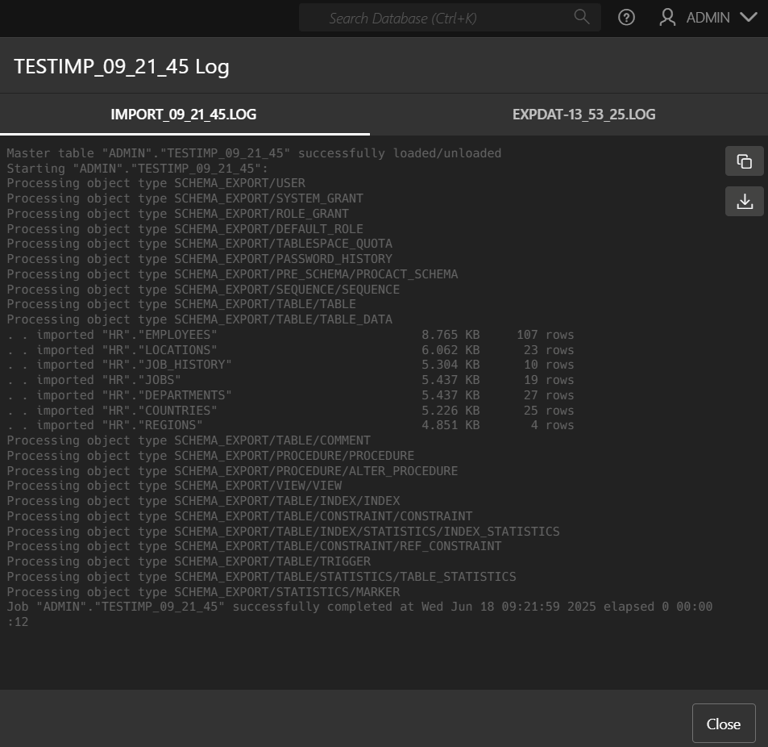

You can monitor your jobs on the dashboard. You can see if the job is running, how long it's been running and the log files after completion etc.

Hope this helps.

Console Data Pump Import Wizard

Using the Console UI to Import Data into an Autonomous Database

Austine

12/27/2024